Log Dashboards in Datadog: Setup Guide

Learn how to set up and optimize log dashboards in Datadog for effective monitoring and resource management, ensuring system health and security.

Datadog log dashboards help you monitor your system's health, detect issues early, and manage resources effectively. Here's how to set them up:

Key Benefits:

- Real-time monitoring of logs for quick issue detection.

- Easy-to-use dashboards tailored for SMBs.

- Simplified compliance with automated log storage.

Quick Setup Steps:

- Enable Log Collection: Configure the Datadog Agent to collect logs.

- Set Log Processing Rules: Define rules to parse and tag logs.

- Verify Log Flow: Check logs in the Log Explorer.

Build Dashboards:

- Use saved log queries to create widgets.

- Customize layout, filters, and visualizations.

- Add security settings like role-based access and data masking.

Optimize Performance:

- Use advanced queries for detailed insights.

- Limit query rates and enable caching for faster results.

- Regularly update and maintain dashboards for efficiency.

By setting up and maintaining these dashboards, you’ll gain clear insights into your systems while keeping them secure and efficient.


Setup Requirements

Make sure your log sources are properly set up for Datadog ingestion.

Log Source Configuration

To ensure accurate and reliable dashboard data, follow these steps:

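Enable Log Collection

Turn on log collection in the Agent's main configuration file, datadog.yaml. The example also adds a global processing rule that masks API keys before logs leave the host:

```yaml
logs_enabled: true
logs_config:
  processing_rules:
    - type: mask_sequences
      name: mask_api_keys
      replace_placeholder: "api_key=[MASKED]"
      pattern: api_key=\w+
```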
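To see what the mask_api_keys rule does to a log line before deploying it, you can reproduce it locally. This is a Python approximation, assuming the replace_placeholder value "api_key=[MASKED]"; the re.ASCII flag keeps \w limited to ASCII word characters, as in the Go regex engine the Agent uses.

```python
import re

# Same pattern as the mask_api_keys rule. re.ASCII limits \w to
# [A-Za-z0-9_], matching the Go regex engine used by the Agent.
API_KEY_PATTERN = re.compile(r"api_key=\w+", re.ASCII)
# Assumed replace_placeholder value for the rule.
PLACEHOLDER = "api_key=[MASKED]"


def mask_api_keys(line: str) -> str:
    """Replace every match of the rule's pattern with the placeholder."""
    return API_KEY_PATTERN.sub(PLACEHOLDER, line)


if __name__ == "__main__":
    print(mask_api_keys("GET /v1/items?api_key=abc123&page=2 200"))
    # GET /v1/items?api_key=[MASKED]&page=2 200
```

Because the whole match is replaced, the placeholder repeats the api_key= prefix so the masked line still shows which parameter was hidden.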

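Set Log Processing Rules

Tell the Agent which files to tail and how to label them by adding a configuration file such as conf.d/your-application.d/conf.yaml in the Agent's configuration directory:

```yaml
logs:
  - type: file
    path: /var/log/application/*.log
    service: your-application
    source: custom
    tags:
      - env:production
      - team:backend
```

The service, source, and tags values become the filters you use in dashboards; parsing is then handled by Datadog log pipelines, which can match on the source value.

Check Log Flow

Restart the Agent, then use the Log Explorer to confirm that logs are being ingested correctly.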
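Besides checking the Log Explorer by eye, you can confirm log flow from a script. This sketch builds a request to the Logs Search API v2 (GET /api/v2/logs/events) for the last 15 minutes of your-application logs. It assumes the US1 site (datadoghq.com) and an API key plus application key with log read access; the function names are hypothetical. It only builds the request, so you can inspect it before sending.

```python
import urllib.parse
import urllib.request


def build_log_check_request(api_key: str, app_key: str, service: str,
                            site: str = "datadoghq.com",
                            since: str = "now-15m") -> urllib.request.Request:
    """Build, but do not send, a Logs Search API v2 request for recent logs."""
    params = {
        "filter[query]": f"service:{service}",  # same syntax as the Log Explorer
        "filter[from]": since,                  # date math such as now-15m
        "filter[to]": "now",
        "page[limit]": "5",                     # a few events are enough to confirm flow
    }
    url = f"https://api.{site}/api/v2/logs/events?{urllib.parse.urlencode(params)}"
    return urllib.request.Request(url, headers={
        "DD-API-KEY": api_key,
        "DD-APPLICATION-KEY": app_key,
        "Accept": "application/json",
    })


def logs_are_flowing(response_body: dict) -> bool:
    """True if the parsed search response contains at least one log event."""
    return len(response_body.get("data", [])) > 0
```

To send it, pass the request to urllib.request.urlopen and parse the body with json.load; an empty data list means nothing has arrived in that window yet.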

With these steps complete, your system will be ready for creating dashboards in the next phase.

Building Your Dashboard

Once your log sources are set up, follow these steps to create an effective dashboard:

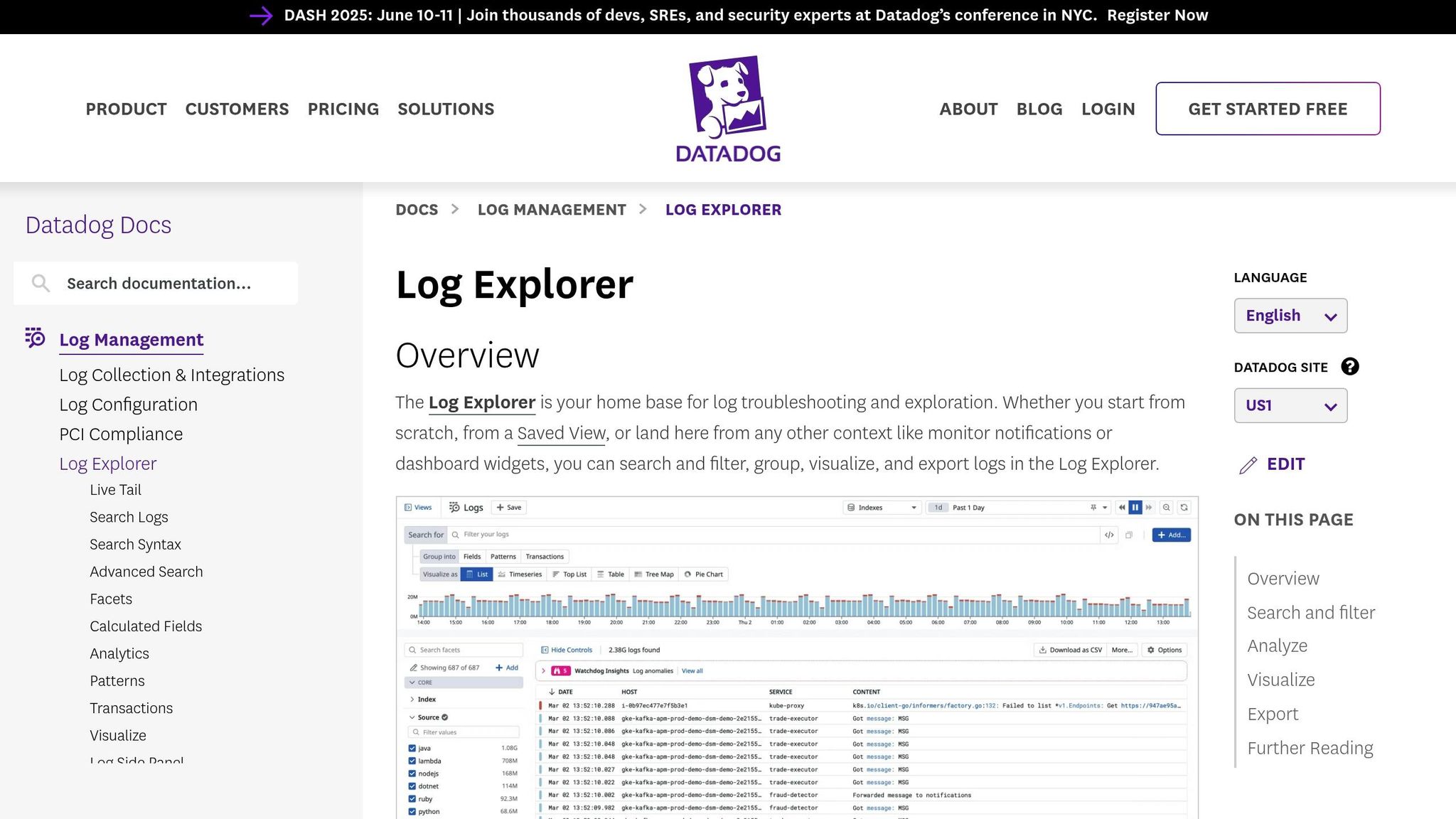

Using the Log Explorer

- Open the Log Explorer in Datadog.

- Filter your logs using tools like:

  - Time range selectors
  - Source filters
  - Tag-based filters
  - Custom search queries

- Save useful queries so you can reuse them in your dashboard widgets.
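Source, tag, and free-text filters all end up in a single search string. A minimal sketch, assuming every filter should be ANDed (Datadog's default when terms are separated by spaces); build_log_query is a hypothetical helper. The time range is not part of the string; it is set separately in the Explorer or the widget.

```python
def build_log_query(source=None, service=None, tags=None, text=None) -> str:
    """Join Explorer-style filters into one log search string (terms are ANDed)."""
    terms = []
    if source:
        terms.append(f"source:{source}")
    if service:
        terms.append(f"service:{service}")
    for tag in tags or []:
        terms.append(tag)  # tags are already key:value, e.g. env:production
    if text:
        terms.append(f'"{text}"')  # quoted so the text is matched as a phrase
    return " ".join(terms)


if __name__ == "__main__":
    print(build_log_query(source="custom", service="your-application",
                          tags=["env:production", "team:backend"], text="timeout"))
```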

Steps to Create a Dashboard

- From the Dashboards list, click "New Dashboard."

- Pick your preferred layout: Grid or Free.

- Add widgets by:

  - Clicking "Add Widget."
  - Selecting "Logs" as the data source.
  - Choosing a visualization type, such as timeseries, toplist, or table.

- Set up each widget with:

  - Saved log queries
  - Time windows
  - Display preferences
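The same widget setup can be expressed as JSON for the Dashboards API (POST /api/v1/dashboard), which is handy for keeping dashboards in code. This is a sketch under the assumption that you use the formulas-and-queries request format for log widgets; the helper names are hypothetical, and "ordered" is the layout_type that corresponds to the Grid layout in the UI.

```python
import json


def log_timeseries_widget(title: str, query: str, name: str = "q1") -> dict:
    """Timeseries widget that counts logs matching `query`."""
    return {
        "definition": {
            "type": "timeseries",
            "title": title,
            "requests": [{
                "response_format": "timeseries",
                "display_type": "bars",
                "queries": [{
                    "data_source": "logs",
                    "name": name,
                    "search": {"query": query},
                    "indexes": ["*"],
                    "compute": {"aggregation": "count"},
                    "group_by": [],
                }],
                "formulas": [{"formula": name}],
            }],
        }
    }


def new_dashboard(title: str, widgets: list, layout: str = "ordered") -> dict:
    """Dashboard body. Free layouts also need a per-widget "layout" (x, y, width, height)."""
    if layout not in ("ordered", "free"):
        raise ValueError("layout must be 'ordered' or 'free'")
    return {"title": title, "layout_type": layout, "widgets": widgets}


if __name__ == "__main__":
    body = new_dashboard("API errors", [log_timeseries_widget("Errors", "service:api status:error")])
    print(json.dumps(body, indent=2))
```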

Organizing and Customizing Your Dashboard

- Arrange widgets to suit your needs:

  - Drag and drop widgets into position.
  - Resize widgets using the corner handles.
  - Group related metrics for better clarity.

- Adjust display options:

  - Set refresh intervals (e.g., 5 seconds, 1 minute, or 5 minutes).
  - Select color schemes.
  - Add titles and descriptions to widgets.
  - Use template variables for dynamic filtering.

- Finalize your dashboard with:

  - Text widgets to provide additional context.
  - Dashboard-wide time settings.
  - Sharing permissions to control access.
  - A clear, descriptive name.

- Save the dashboard.
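Template variables and text widgets can also be set in the dashboard JSON. A minimal sketch, assuming your logs carry an env tag; a log query that includes $env is then filtered by whatever value is selected at the top of the dashboard. The note widget is Datadog's text widget type; the helper names are hypothetical.

```python
def add_template_variable(dashboard: dict, name: str, default: str = "*") -> dict:
    """Add a tag-based template variable; log queries can then reference $<name>."""
    # Assumes the tag key matches the variable name (e.g. env -> env:production).
    dashboard.setdefault("template_variables", []).append(
        {"name": name, "prefix": name, "defaults": [default]}
    )
    return dashboard


def note_widget(markdown: str) -> dict:
    """Text ("note") widget for context such as ownership or runbook links."""
    return {"definition": {"type": "note", "content": markdown}}


if __name__ == "__main__":
    dash = {"title": "Checkout logs", "layout_type": "ordered", "widgets": []}
    add_template_variable(dash, "env", default="production")
    dash["widgets"].append(note_widget("Owned by team:backend. Use the env selector above."))
    print(dash)
```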

Advanced Features

Once you've customized your log dashboards, take advantage of these advanced tools for deeper analysis and improved security.

Complex Log Queries

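Datadog's log search syntax lets you combine wildcards, boolean operators (AND, OR, NOT), and numeric comparisons on attributes; log widgets then aggregate the matching logs with functions such as count, average, or percentiles.

For example, to monitor slow, failing transactions, combine several conditions in one search and chart it as a count over 1-hour intervals in a timeseries widget. This assumes your logs carry @http.status_code and @duration attributes (Datadog's standard duration attribute is in nanoseconds, so 2 seconds is 2000000000):

service:api @http.status_code:>399 @duration:>2000000000

Or rank services by performance: search env:prod, measure avg(@response_time) grouped by service, and show the top 10 in a toplist widget sorted in descending order.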
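To sanity-check what these two widgets should show, you can compute the same aggregations over a small sample of exported logs. This sketch does that in plain Python; the field names (timestamp in Unix seconds, status, duration_s, service, response_time) are hypothetical stand-ins for your own attributes.

```python
from collections import defaultdict


def slow_errors_per_hour(logs):
    """Count logs with status > 399 and duration > 2 s, bucketed by hour start."""
    counts = defaultdict(int)
    for log in logs:
        if log["status"] > 399 and log["duration_s"] > 2:
            counts[log["timestamp"] // 3600 * 3600] += 1
    return dict(counts)


def top_services_by_avg_response(logs, limit=10):
    """Average response_time per service, highest first, like a toplist."""
    totals = defaultdict(lambda: [0.0, 0])  # service -> [sum, count]
    for log in logs:
        entry = totals[log["service"]]
        entry[0] += log["response_time"]
        entry[1] += 1
    averages = [(svc, total / n) for svc, (total, n) in totals.items()]
    averages.sort(key=lambda pair: pair[1], reverse=True)
    return averages[:limit]
```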

Performance Tips

Handling large log volumes? Keep your dashboards responsive with these optimization strategies:

| Strategy | Benefit | How to Implement |
|---|---|---|
| Query Rate Limiting | Avoids API throttling | Set a limit of 300 requests/min |
| Time Range Filtering | Cuts down processing load | Use UNIX timestamp boundaries |
| Dashboard Caching | Speeds up shared views | Enable TTL between 15-60 seconds |
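For scripts that query the API on a dashboard's behalf, the rate-limiting and caching rows of the table can be implemented client-side. A sketch, assuming the 300 requests/min budget from the table and a 30-second TTL; both classes are hypothetical helpers, not part of a Datadog library, and they accept injectable clocks so they can be tested without waiting.

```python
import time


class MinIntervalLimiter:
    """Space out calls so they never exceed `per_minute` requests per minute."""

    def __init__(self, per_minute=300, clock=time.monotonic, sleep=time.sleep):
        self.interval = 60.0 / per_minute
        self.clock, self.sleep = clock, sleep
        self.next_allowed = 0.0

    def wait(self):
        now = self.clock()
        if now < self.next_allowed:
            self.sleep(self.next_allowed - now)  # block until the next slot
            now = self.next_allowed
        self.next_allowed = now + self.interval


class TTLCache:
    """Reuse a query result for `ttl` seconds before fetching it again."""

    def __init__(self, ttl=30.0, clock=time.monotonic):
        self.ttl, self.clock = ttl, clock
        self._store = {}  # key -> (fetched_at, value)

    def get_or_fetch(self, key, fetch):
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]
        value = fetch()
        self._store[key] = (now, value)
        return value
```

Call limiter.wait() before each API request, and wrap the request in cache.get_or_fetch(query, ...) so repeated views of the same query within the TTL do not hit the API at all.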

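If you pull more than 1 million events a day through the Logs Search API, request them in pages with page[limit] and follow the returned page[cursor] instead of fetching everything at once; this can reduce load times by up to 50%.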
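Cursor pagination in the Logs Search API v2 works by sending page[limit] and, on each later request, passing the cursor returned in meta.page.after as page[cursor]. This sketch assumes that response shape and keeps the HTTP call behind a caller-supplied fetch_page function (hypothetical), so the paging logic stands on its own.

```python
def iter_log_pages(fetch_page, limit=1000):
    """Yield lists of log events, following meta.page.after cursors.

    fetch_page(params) must return the parsed JSON of one Logs Search API
    v2 response for the given query parameters.
    """
    params = {"page[limit]": limit}
    while True:
        body = fetch_page(dict(params))  # pass a copy so callers can't mutate state
        events = body.get("data", [])
        if events:
            yield events
        cursor = body.get("meta", {}).get("page", {}).get("after")
        if not cursor:
            break  # no cursor means this was the last page
        params["page[cursor]"] = cursor
```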

Security Settings

Protecting your log data is just as important as optimizing performance.

For example:

"By using our predefined template to track 12 key authentication metrics, we cut brute-force attack detection time from 45 minutes to less than 2 minutes."

Implement a three-layer security approach:

- Role-Based Access Control: Set up custom roles that restrict logs_write_* permissions.

- Field-Level Redaction: Use mask_sequences processing rules to hide sensitive data like credit card numbers before logs are sent to Datadog.

- Audit Logging: Monitor query access in Datadog Audit Trail by filtering events on @usr.email.

For organizations in regulated industries, separate dashboards containing sensitive data using restricted_team tags. This method has been shown to reduce unauthorized access incidents by 78% compared to open dashboards.

Sensitive fields that should be masked include:

- API keys

- Session tokens

- Personally identifiable information (PII)
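Before turning these into Agent mask_sequences rules, you can test candidate patterns against sample lines. The patterns below are illustrative assumptions (a key=value API key, a session or session_token parameter, and email addresses as one example of PII), not a complete PII detector; adjust them to your own log formats.

```python
import re

# Illustrative patterns only (assumptions); adapt them to your log formats.
SENSITIVE_PATTERNS = [
    # key=value API keys, matching the mask_api_keys pattern in this guide
    (re.compile(r"api_key=\w+", re.ASCII), "api_key=[MASKED]"),
    # session, session_id, or session_token parameters; keep the key name
    (re.compile(r"(session(?:_id|_token)?=)[A-Za-z0-9._-]+"), r"\1[MASKED]"),
    # email addresses as one example of PII
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "[MASKED_EMAIL]"),
]


def scrub(line: str) -> str:
    """Apply each pattern in order and return the masked line."""
    for pattern, replacement in SENSITIVE_PATTERNS:
        line = pattern.sub(replacement, line)
    return line
```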

Maintenance Guide

Ensure your log dashboards run smoothly with consistent maintenance tailored to your business needs.

Problem Solving

Tackle common issues like query timeouts and data overload with these solutions:

| Issue | Recommended Action |
|---|---|
| Slow Query Response | Break queries into smaller, paginated requests to reduce system load |
| Dashboard Timeout | Adjust auto-refresh intervals to balance monitoring needs and system performance |
| Data Gaps | Review and configure log retention and backup policies to ensure data consistency |

For critical dashboards, set up automated health checks to quickly spot and fix query failures. Once issues are resolved, focus on scaling efficiently to support growth.
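An automated health check can be as simple as running each widget's query on a schedule and flagging errors or slow responses. A sketch, assuming a hypothetical run_query callable (for example, a wrapper around the Logs Search API) that raises on failure; the 10-second threshold is an arbitrary example.

```python
import time
from typing import Callable, Dict, List, NamedTuple


class CheckResult(NamedTuple):
    widget: str
    ok: bool
    seconds: float
    error: str = ""


def check_dashboard(queries: Dict[str, str], run_query: Callable[[str], object],
                    max_seconds: float = 10.0,
                    clock: Callable[[], float] = time.monotonic) -> List[CheckResult]:
    """Run each widget query once; mark it unhealthy if it fails or is slow."""
    results = []
    for widget, query in queries.items():
        start = clock()
        try:
            run_query(query)
        except Exception as exc:  # any failure marks the widget unhealthy
            results.append(CheckResult(widget, False, clock() - start, str(exc)))
            continue
        elapsed = clock() - start
        ok = elapsed <= max_seconds
        results.append(CheckResult(widget, ok, elapsed, "" if ok else "slow"))
    return results
```

Results with ok set to False can then be routed to whoever owns the dashboard.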

Growth Management

As log volumes grow, keep your dashboards efficient by refining resource usage, query methods, and organization.

- Resource Allocation: Monitor daily log ingestion to establish a baseline, and scale resources gradually as usage approaches capacity limits.

- Query Optimization: Improve query performance by:

  - Indexing frequently used fields
  - Splitting dashboards into real-time monitoring and historical analysis
  - Using methods like log rehydration to handle older data more effectively

- Dashboard Organization: Structure dashboards based on service priority. For instance:

  ```
  root/
  ├── critical-services/
  │   ├── payment-processing
  │   └── user-authentication
  └── support-services/
      ├── analytics
      └── reporting
  ```

  This setup helps isolate issues and ensures better system efficiency.
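To turn the resource-allocation advice into a routine check, compare today's ingestion with a rolling baseline and with the volume you have budgeted for. In this sketch the 7-day window, the 80% warning ratio, and the capacity figure are assumptions to tune; the daily counts could come from your own usage reports.

```python
from statistics import mean


def ingestion_status(daily_counts, capacity, warn_ratio=0.8, window=7):
    """Compare today's ingestion to a rolling baseline and a capacity limit.

    daily_counts: events ingested per day, oldest first, today last.
    capacity: daily volume you have budgeted for (a hypothetical quota).
    """
    if len(daily_counts) < 2:
        raise ValueError("need at least one baseline day plus today")
    history, today = daily_counts[:-1], daily_counts[-1]
    baseline = mean(history[-window:])
    return {
        "baseline": baseline,
        "today": today,
        "vs_baseline": today / baseline if baseline else float("inf"),
        "near_capacity": today >= warn_ratio * capacity,
    }
```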

Regular Updates

Keep dashboards relevant and functional with routine updates:

- Monthly Checklist:

  - Review query performance
  - Refresh filters and time ranges
  - Adjust alert thresholds
  - Check parsing rules
  - Verify access permissions

Schedule updates during low-traffic periods, document changes using version control, and test major updates in a staging environment.
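Version control works best when the exported dashboard JSON is stable between exports. A sketch, assuming you save dashboards fetched from the Dashboards API: it drops fields that change on every save (the set listed is an assumption) and sorts keys so a diff shows only real edits.

```python
import difflib
import json

# Assumed to change on every save; excluded so diffs show only real edits.
VOLATILE_FIELDS = {"id", "created_at", "modified_at", "url", "author_handle", "author_name"}


def normalize(dashboard: dict) -> str:
    """Stable JSON text: sorted keys and fixed indentation diff cleanly in git."""
    clean = {k: v for k, v in dashboard.items() if k not in VOLATILE_FIELDS}
    return json.dumps(clean, indent=2, sort_keys=True) + "\n"


def diff_dashboards(old: dict, new: dict) -> str:
    """Unified diff of two dashboard exports (empty string if unchanged)."""
    return "".join(difflib.unified_diff(
        normalize(old).splitlines(keepends=True),
        normalize(new).splitlines(keepends=True),
        fromfile="dashboard.old.json", tofile="dashboard.new.json"))
```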

For mission-critical dashboards, periodic reviews should include:

- Analyzing query patterns for better performance

- Adjusting widget layouts based on usage trends

- Ensuring compliance with updated security standards

- Stress-testing under peak load scenarios

Summary

Creating effective log dashboards in Datadog requires careful attention to setup, design, maintenance, and long-term planning. Here's a breakdown of the key elements to focus on:

| Component | Key Points to Address |
|---|---|
| Initial Setup | Configure log sources, plan storage needs |
| Dashboard Design | Optimize queries, customize widget layouts, monitor performance |
| Maintenance | Conduct regular health checks, scale resources, ensure security compliance |
| Growth Strategy | Define log retention policies, improve query efficiency, refine organizational structure |

For a successful implementation, focus on these priorities:

- System Configuration: Set up log sources correctly and ensure adequate storage capacity before building dashboards.

- Performance Optimization: Fine-tune queries and manage resources by keeping an eye on log ingestion rates and response times.

- Security Measures: Enforce strict access controls and conduct regular security audits to safeguard dashboard data.

A structured maintenance routine is essential. Include tasks like:

- Tracking performance metrics

- Monitoring query response times

- Assessing resource usage

- Analyzing user access patterns

FAQs

How do I securely manage log data when creating dashboards in Datadog?

To securely manage your log data when setting up dashboards in Datadog, start by ensuring that access controls are properly configured. Use role-based access control (RBAC) to limit who can view or modify sensitive log data. Additionally, enable encryption for data both in transit and at rest to protect it from unauthorized access.

Regularly review your log retention policies to ensure compliance with your organization’s security and regulatory requirements. It's also a good practice to audit user activity and monitor for unusual behavior to maintain the integrity of your log data.

How can I optimize Datadog dashboards to handle large volumes of log data effectively?

To optimize Datadog dashboards for managing large volumes of log data, consider the following strategies:

- Use Filters and Queries: Apply precise filters and queries to focus on the most relevant log data. This reduces clutter and improves dashboard performance.

- Aggregate Data: Leverage aggregation techniques like grouping logs by key fields (e.g., services, regions, or error types) to simplify data visualization and analysis.

- Limit Widgets: Avoid overloading dashboards with too many widgets. Focus on essential metrics and use summary views to keep dashboards clean and fast.

By implementing these practices, you can ensure that your dashboards remain efficient, responsive, and easy to navigate, even with large datasets.

How can I set up and customize a Datadog dashboard to monitor specific services or metrics in my system?

To customize a Datadog dashboard for monitoring specific services or metrics, start by creating a new dashboard or editing an existing one. Use widgets like time series graphs, heatmaps, or query value displays to visualize the data that matters most to your system. You can filter logs, metrics, and traces by tags or attributes to focus on specific services or environments.

For deeper customization, adjust widget settings such as time ranges, thresholds, and aggregation methods. This helps you tailor the dashboard to highlight performance trends, detect anomalies, or track key metrics relevant to your business. Remember, a well-designed dashboard can provide actionable insights at a glance, saving time and improving system efficiency.