Top 5 Datadog Features To Reduce Resource Usage

Explore five essential features that optimize resource usage and reduce costs for small and medium-sized businesses using cloud monitoring tools.

Managing resources effectively is critical for small and medium-sized businesses (SMBs) working with tight budgets. Datadog offers five key features that help reduce resource usage while keeping systems reliable:

- Anomaly Detection: Uses machine learning to identify unusual patterns in metrics like CPU, memory, and network activity. It helps prevent over-scaling and reduces manual monitoring efforts.

- Flex Logs: Lets you choose which logs to retain for analysis, cutting storage and processing costs.

- Custom Tagging: Organizes resources for targeted monitoring, making it easier to identify inefficiencies and manage costs.

- Rate Limiting & Sampling: Limits data ingestion and selectively records transactions, ensuring accurate monitoring while controlling expenses.

- Real-Time Dashboards: Centralizes data visualization, offloading processing to Datadog’s infrastructure to reduce local resource strain.

These tools are easy to set up, scale with your business, and help SMBs optimize their monitoring strategies without requiring a large team or extra infrastructure.

1. Anomaly Detection for Resource Management

Datadog's anomaly detection leverages machine learning to keep an eye on critical metrics like CPU usage, memory, network activity, and application performance. By analyzing patterns over time, it identifies deviations from normal behavior and flags them for your team. This ensures you’re alerted to real issues without needing to comb through endless dashboards.

This approach not only saves time but also helps trim down costs by catching problems early and reducing unnecessary manual monitoring.

Resource Optimization Benefits

One standout advantage is its ability to surface underutilized servers or overprovisioned instances. For example, if a host's CPU usage consistently runs far below its capacity, that pattern marks the host as a candidate for consolidation or downsizing.
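To make the underutilization idea concrete, here is a minimal sketch that flags hosts whose CPU stays low in nearly every sample. The function name, the 20% threshold, and the sample data are assumptions for illustration; this is not Datadog's detection logic, only the kind of check you could run on exported metrics.

```python
from typing import Dict, List


def find_underutilized(cpu_by_host: Dict[str, List[float]],
                       threshold_pct: float = 20.0,
                       min_fraction_below: float = 0.95) -> List[str]:
    """Return hosts whose CPU samples sit below threshold_pct almost all the time."""
    candidates = []
    for host, samples in cpu_by_host.items():
        if not samples:
            continue  # no data, so draw no conclusion about this host
        below = sum(1 for s in samples if s < threshold_pct)
        if below / len(samples) >= min_fraction_below:
            candidates.append(host)
    return sorted(candidates)


# Hypothetical CPU percentages for three hosts.
samples = {
    "web-1": [55.0, 61.2, 70.3, 48.9],
    "batch-1": [4.0, 6.5, 3.2, 5.1],
    "cache-1": [12.0, 18.0, 35.0, 15.0],
}
underutilized = find_underutilized(samples)
```

Requiring a high fraction of low samples, rather than a low average, keeps hosts with occasional real bursts (like `cache-1` here) off the consolidation list.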

It also tackles issues like memory leaks or runaway processes before they snowball into expensive problems. Early detection allows teams to address concerns during regular work hours, avoiding costly after-hours emergencies.

When paired with auto-scaling, anomaly detection distinguishes between genuine traffic surges and fleeting anomalies. This prevents over-scaling, which can lead to inflated bills.
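One simple way to separate a genuine surge from a fleeting spike is to require the load to stay high for several consecutive samples before scaling. The sketch below illustrates that idea only; the function name, the 80% threshold, and the three-sample window are assumptions, and Datadog's own anomaly algorithms are more sophisticated than this.

```python
from typing import Sequence


def should_scale_out(cpu_samples: Sequence[float],
                     threshold_pct: float = 80.0,
                     sustained_points: int = 3) -> bool:
    """Scale out only if the last `sustained_points` samples all exceed the threshold."""
    if len(cpu_samples) < sustained_points:
        return False  # not enough history to judge
    recent = cpu_samples[-sustained_points:]
    return all(s > threshold_pct for s in recent)


brief_spike = [40.0, 95.0, 42.0, 41.0]  # one noisy sample, then back to normal
real_surge = [40.0, 85.0, 88.0, 92.0]   # load stays high

scale_for_spike = should_scale_out(brief_spike)
scale_for_surge = should_scale_out(real_surge)
```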

Cost Savings Made Simple

Traditional monitoring often requires a dedicated team to watch dashboards around the clock - a luxury many small and medium-sized businesses (SMBs) can’t afford. Anomaly detection cuts through the noise, reducing false positives and highlighting only actionable concerns.

Its predictive capabilities also help spot performance trends that could lead to outages. By addressing these trends early, businesses can avoid downtime and the revenue losses that come with it.

Easy Setup for SMBs

Setting up anomaly detection is straightforward, requiring minimal configuration. The system automatically learns your infrastructure's baseline without needing deep technical expertise to define optimal ranges.

Pre-built templates simplify monitoring for common SMB scenarios, such as tracking e-commerce traffic, SaaS applications, or database performance. These templates eliminate much of the trial and error in setting up sensitivity levels and detection thresholds.

Clear visuals, like graphs showing normal ranges and flagged anomalies, make it easy for teams to understand what’s happening and take action - no specialized skills required.

Scalable and Adaptable

As your business grows, anomaly detection evolves with it. It adjusts to seasonal changes and different environments without requiring manual reconfiguration.

Custom sensitivity settings let you fine-tune alerts based on the importance of specific services. For example, customer-facing apps can have stricter monitoring to catch issues faster, while internal tools can have broader thresholds to avoid unnecessary alerts.
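As a sketch of how per-service sensitivity might look in practice, the helper below builds anomaly monitor query strings in the general shape of Datadog's `anomalies()` function, where a smaller bounds value means a tighter band and more sensitive alerts. The helper name, metric, service tags, and chosen bounds are assumptions; check the monitor documentation for the full set of options your account needs.

```python
def anomaly_query(metric: str, scope: str, algorithm: str = "agile",
                  bounds: int = 2, window: str = "last_4h") -> str:
    """Build an anomaly monitor query string (illustrative helper, not an official API)."""
    if algorithm not in {"basic", "agile", "robust"}:
        raise ValueError(f"unknown anomaly algorithm: {algorithm}")
    if bounds < 1:
        raise ValueError("bounds must be a positive integer")
    return (f"avg({window}):anomalies(avg:{metric}{{{scope}}}, "
            f"'{algorithm}', {bounds}) >= 1")


# Tighter band for a customer-facing service, wider band for an internal tool.
checkout_query = anomaly_query("system.cpu.user", "service:checkout", bounds=2)
internal_query = anomaly_query("system.cpu.user", "service:internal-tools", bounds=4)
```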

The system also works seamlessly across multiple environments - whether it’s development, staging, or production. It learns unique patterns for each, ensuring accurate monitoring without flooding your team with irrelevant alerts from non-production setups.

2. Flex Logs for Log Management

Datadog's Flex Logs feature streamlines log management by letting small and medium-sized businesses (SMBs) decide which logs get full indexing for frequent analysis and which are kept in lower-cost storage for long retention and occasional queries. Because Datadog separates log ingestion from log indexing, and Flex Logs separates storage from query compute, you can pay for deep analysis only on the logs that need it while keeping the rest available when required.

Making the Most of Resources

This approach helps reduce storage demands by directing logs to the appropriate processing level. For example, critical application logs can be indexed in detail for thorough analysis, while less vital logs can be temporarily retained. Smart sampling ensures you capture meaningful data without unnecessary duplication.
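The routing decision described above can be sketched as a small rule function. The tier names (`index`, `short_retention`, `exclude`), the service list, and the rules themselves are hypothetical; in Datadog you would express similar rules through index filters and retention settings rather than code.

```python
from typing import Dict

# Hypothetical set of services whose logs always deserve full indexing.
CRITICAL_SERVICES = {"checkout", "auth"}


def choose_tier(log: Dict[str, str]) -> str:
    """Pick a processing tier for one log record based on status and service."""
    status = log.get("status", "info")
    service = log.get("service", "")
    if status in {"error", "critical"} or service in CRITICAL_SERVICES:
        return "index"            # detailed indexing for thorough analysis
    if status == "debug":
        return "exclude"          # noisy, low-value logs are filtered out
    return "short_retention"      # kept temporarily for immediate insight


tiers = [
    choose_tier({"service": "checkout", "status": "info"}),
    choose_tier({"service": "reports", "status": "error"}),
    choose_tier({"service": "reports", "status": "debug"}),
    choose_tier({"service": "reports", "status": "info"}),
]
```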

Cost-Friendly Solution

Flex Logs operates on a pay-per-use model, meaning your costs align with actual usage. By filtering out non-essential log data right at ingestion, you can cut down on both processing and storage expenses. Custom retention policies add another layer of cost control, tailoring storage to your specific needs.

Simple Setup for SMBs

Flex Logs offers a user-friendly visual configuration interface along with pre-configured templates for common logging scenarios. This makes it easy for team members, regardless of their technical expertise, to manage log processing without the need for major system overhauls. The setup adapts seamlessly to different workloads, maintaining efficiency across the board.

Built for Growth and Adaptability

Flex Logs can handle spikes in activity by using dynamic sampling and filtering to optimize resource usage during peak times. It also supports multiple environments - like development, staging, and production - ensuring your log management strategy remains effective no matter how your workload changes.

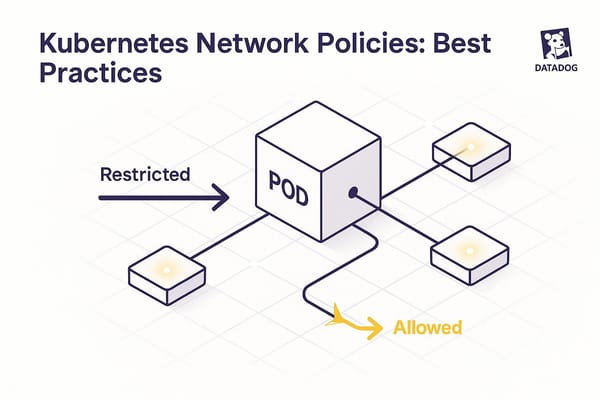

3. Custom Tagging and Resource Filtering

Datadog’s custom tagging takes resource monitoring to the next level by helping you organize your infrastructure in a way that makes sense for your business. By applying tags - like environment, team, application, or cost center - you can group and categorize resources effectively. This makes it easier to monitor specific areas and quickly spot opportunities for improvement.

Custom tagging integrates seamlessly with tools like anomaly detection and Flex Logs, allowing you to focus on particular subsets of your infrastructure rather than trying to monitor everything at once. This targeted monitoring approach cuts down on unnecessary noise in your data and makes it easier to pinpoint resource bottlenecks.

Resource Optimization Impact

Tagging isn’t just about organization - it’s a powerful tool for understanding how your resources are being used. When you tag resources by categories like environment (e.g., production, staging, development), application type, or business function, you gain clear insights into which areas are consuming the most CPU, memory, or network bandwidth.

Filtering capabilities let you zoom in on specific resource groups, so you’re not overwhelmed by irrelevant data. For instance, you can filter to view only production database servers during peak usage or isolate development resources that might be idling during off-hours. This level of granularity helps you make smarter decisions about scaling, optimizing configurations, or reallocating resources.

Custom tagging also enables conditional alerts tailored to specific tagged resources. Instead of receiving generic alerts for high CPU usage, you can configure them to trigger only when critical production systems exceed thresholds, while allowing more flexibility for non-critical environments like development.
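A sketch of tag-scoped alerting: the helper below generates one threshold monitor query per environment, with a stricter CPU threshold for production. The helper name, the `env` tag values, and the thresholds are assumptions for illustration; the query strings follow the general form of Datadog metric monitor queries.

```python
def cpu_alert_query(env: str, threshold_pct: int, window: str = "last_5m") -> str:
    """Build a CPU threshold monitor query scoped to one `env` tag value."""
    return f"avg({window}):avg:system.cpu.user{{env:{env}}} > {threshold_pct}"


# Hypothetical thresholds: strict for production, looser elsewhere.
THRESHOLDS = {"prod": 80, "staging": 90, "dev": 95}
queries = {env: cpu_alert_query(env, pct) for env, pct in THRESHOLDS.items()}
```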

Cost-Effectiveness

Tagging plays a key role in managing costs by giving you a clear picture of resource consumption. When resources are tagged by project, team, or environment, you can allocate costs more accurately and identify areas of overspending.
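Cost allocation by tag boils down to a group-and-sum. The sketch below assumes you already have a list of resources with tags and a monthly cost figure; the field names and dollar amounts are made up for illustration.

```python
from collections import defaultdict
from typing import Dict, List


def cost_by_tag(resources: List[dict], tag_key: str) -> Dict[str, float]:
    """Sum monthly cost per value of one tag; untagged resources get their own bucket."""
    totals: Dict[str, float] = defaultdict(float)
    for resource in resources:
        value = resource["tags"].get(tag_key, "untagged")
        totals[value] += resource["monthly_cost_usd"]
    return dict(totals)


# Hypothetical inventory.
resources = [
    {"name": "web-1", "tags": {"team": "platform", "env": "prod"}, "monthly_cost_usd": 120.0},
    {"name": "web-2", "tags": {"team": "platform", "env": "prod"}, "monthly_cost_usd": 80.0},
    {"name": "etl-1", "tags": {"team": "data", "env": "dev"}, "monthly_cost_usd": 50.0},
    {"name": "tmp-1", "tags": {"env": "dev"}, "monthly_cost_usd": 30.0},
]
per_team = cost_by_tag(resources, "team")
```

The `untagged` bucket is worth watching on its own: it shows how much spend nobody has claimed yet.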

Tags also make it easier to set up automated cost controls. For example, you can create policies to automatically shut down non-essential development resources on weekends or scale back staging environments during periods of low activity. These automated actions, guided by tags, help reduce unnecessary expenses without manual intervention.
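A tag-guided shutdown policy could be sketched like this. The tag names (`env`, `essential`) and the weekend rule are assumptions, and the function only selects candidates; actually stopping instances would go through your cloud provider's tooling.

```python
from datetime import date
from typing import List


def shutdown_candidates(resources: List[dict], today: date) -> List[str]:
    """On weekends, return names of dev resources not marked essential."""
    if today.weekday() < 5:  # Monday=0 ... Friday=4
        return []
    return [
        r["name"]
        for r in resources
        if r["tags"].get("env") == "dev" and r["tags"].get("essential") != "true"
    ]


# Hypothetical inventory.
inventory = [
    {"name": "dev-api", "tags": {"env": "dev"}},
    {"name": "dev-db", "tags": {"env": "dev", "essential": "true"}},
    {"name": "prod-api", "tags": {"env": "prod"}},
]
saturday = shutdown_candidates(inventory, date(2024, 6, 8))
wednesday = shutdown_candidates(inventory, date(2024, 6, 5))
```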

Ease of Implementation for SMBs

For small and medium-sized businesses, implementing custom tagging is straightforward. Datadog offers user-friendly interfaces for applying tags, whether manually through the web dashboard or programmatically via APIs and configuration management tools.

SMBs can start with a simple tagging approach, focusing on key categories like environment, application, and owner. As your business and monitoring needs expand, you can gradually introduce more detailed tags without disrupting your existing setup. Datadog’s system is flexible - you can modify or add tags to resources at any time.

The filtering interface is intuitive, using search patterns that most team members can quickly learn. You can even save frequently used filter combinations as bookmarks, making it easy for different teams to access the specific views they need. Plus, pre-built dashboard templates often include suggested tagging strategies, giving you a solid starting point.

Scalability and Flexibility

Custom tagging scales effortlessly as your business grows. Whether you’re adding new applications, services, or team members, you can extend your tagging structure without overhauling your monitoring strategy. The filtering system handles large volumes of tagged resources efficiently, ensuring fast search performance even as complexity increases.

As your needs evolve, you can adapt your tagging approach. You might begin by tagging resources by application and later expand to include dimensions like cost centers, compliance requirements, or geographic regions. Each new tag adds another layer of insight into your resource usage, helping you analyze data in more meaningful ways.

Advanced filtering options allow for complex queries that combine multiple tags with logical operators. This means you can easily create specific views, such as “all production web servers on the East Coast managed by the platform team,” without writing custom scripts or maintaining separate configurations. Datadog’s tagging and filtering capabilities grow with you, ensuring your monitoring system stays as dynamic as your business.
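To show how combining tags with logical operators works, here is a minimal matcher that supports "all of", "any of", and "none of" conditions over `key:value` tags. The function and the tag values are assumptions for illustration; Datadog evaluates such filters for you in its search and query bars.

```python
from typing import Iterable, Set


def matches(resource_tags: Set[str],
            all_of: Iterable[str] = (),
            any_of: Iterable[str] = (),
            none_of: Iterable[str] = ()) -> bool:
    """Return True if the tag set satisfies the AND / OR / NOT conditions."""
    any_of = list(any_of)
    has_all = all(tag in resource_tags for tag in all_of)
    has_any = not any_of or any(tag in resource_tags for tag in any_of)
    has_none = not any(tag in resource_tags for tag in none_of)
    return has_all and has_any and has_none


# "All production web servers on the East Coast managed by the platform team"
east_coast = ["region:us-east-1", "region:us-east-2"]
required = ["env:prod", "role:web", "team:platform"]

host_a = {"env:prod", "role:web", "team:platform", "region:us-east-1"}
host_b = {"env:prod", "role:web", "team:platform", "region:us-west-2"}

a_matches = matches(host_a, all_of=required, any_of=east_coast)
b_matches = matches(host_b, all_of=required, any_of=east_coast)
```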

4. Rate Limiting and Sampling Controls

Datadog's rate limiting and sampling controls act as filters that capture essential data while keeping overall data volume manageable. Rate limiting caps the number of data points, logs, or traces sent to Datadog within a given time window. Sampling records a subset of transactions, for example one in every N or a fixed percentage, rather than all of them. Applied with care, both approaches keep the retained data representative enough for monitoring and alerting, though very rare events can be missed at low sampling rates.
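The two mechanisms can be sketched in a few lines. Below, a token bucket caps how many events pass per second, and a keep-one-in-N sampler thins out the rest. Both classes are illustrative stand-ins with made-up names, not Datadog's implementation; the caller passes in the current time so the behavior is easy to follow.

```python
class TokenBucket:
    """Rate limiter: allows up to `capacity` events in a burst, refilled at `rate_per_sec`."""

    def __init__(self, rate_per_sec: float, capacity: float) -> None:
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = capacity
        self.last = 0.0

    def allow(self, now: float) -> bool:
        # Refill tokens for the time elapsed since the previous call.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class EveryNthSampler:
    """Sampler: keeps one event out of every `n`."""

    def __init__(self, n: int) -> None:
        self.n = n
        self.count = 0

    def keep(self) -> bool:
        self.count += 1
        return self.count % self.n == 0


bucket = TokenBucket(rate_per_sec=2.0, capacity=2.0)
burst = [bucket.allow(now=0.0) for _ in range(3)]  # third event exceeds the cap
after_refill = bucket.allow(now=0.5)               # half a second refills one token

sampler = EveryNthSampler(n=10)
kept = sum(1 for _ in range(100) if sampler.keep())
```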

Let’s dive into how these controls can optimize resources and manage costs effectively.

Resource Optimization Impact

Rate limiting plays a direct role in reducing resource usage by cutting down on the volume of data processed. This means less strain on CPU and bandwidth, which is especially beneficial for high-traffic applications. Intelligent sampling takes this a step further by minimizing data collection while still delivering reliable performance metrics.

During traffic surges, rate limiting ensures a balanced distribution of resource loads. Without such measures, sudden spikes in user activity could overwhelm your monitoring system, potentially affecting application performance. Acting as a safeguard, rate limiting ensures monitoring remains efficient and doesn’t interfere with your core services.

For small and medium-sized businesses (SMBs), these reductions in data collection translate to lower storage I/O and memory usage. This is particularly helpful for businesses with limited hardware resources or those looking to maximize the efficiency of their cloud instances.

Cost-Effectiveness

By reducing the amount of logs, metrics, and traces sent to Datadog, these controls directly lower monitoring expenses. Since Datadog's pricing is tied to the volume of data ingested, a well-thought-out sampling strategy can significantly cut costs - especially for high-traffic environments. Even during traffic spikes, you can maintain accurate error detection and performance monitoring without budget surprises.

Ease of Implementation for SMBs

Datadog makes it simple for SMBs to implement rate limiting and sampling controls with user-friendly configuration options. Many settings can be adjusted directly through the web interface, avoiding the need for complex code changes or deployments. You can start with conservative configurations and fine-tune them as your needs evolve.

To help you get started, Datadog provides pre-built sampling templates tailored to common application types, such as web services, APIs, and background processing systems. These templates offer a solid foundation that you can customize to meet your specific monitoring requirements.

Additionally, these controls integrate with Datadog agents and SDKs. Enabling rate limiting often requires just a small tweak to configuration files or environment variables, making it easy to deploy across diverse environments. Changes typically apply as soon as the affected Agent or service reloads its configuration, so rollout is quick.

Datadog’s detailed documentation includes clear examples and best practices, guiding you through the optimization of rate limiting and sampling settings. This makes it easier to strike the right balance between comprehensive monitoring and cost control.

Scalability and Flexibility

As your business grows, rate limiting and sampling controls scale effortlessly to match increased traffic. These features maintain consistent data volumes and predictable costs without requiring manual adjustments. Sampling rates can even adapt automatically to traffic fluctuations, ensuring monitoring overhead aligns with actual usage.

Dynamic sampling is especially powerful. Under normal conditions, it captures a small fraction of transactions, but it can adjust to gather more detailed data when error rates rise or performance issues occur. This adaptive approach ensures you have the visibility you need during critical moments while keeping overall resource usage in check.
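The adaptive behavior described above can be approximated with a simple rule: keep a small base rate while errors are low, then raise the rate in proportion to the error rate. The function name, the 5% base rate, and the 1% error threshold are assumptions; this is a sketch of the idea, not how Datadog computes its sampling rates.

```python
def adaptive_sample_rate(error_rate: float,
                         base_rate: float = 0.05,
                         max_rate: float = 1.0,
                         error_threshold: float = 0.01) -> float:
    """Return the fraction of transactions to keep for the observed error rate."""
    if error_rate <= error_threshold:
        return base_rate  # healthy: keep only a small sample
    # Unhealthy: scale the sample rate with how far errors exceed the threshold.
    boost = error_rate / error_threshold
    return min(max_rate, base_rate * boost)


healthy = adaptive_sample_rate(0.005)
degraded = adaptive_sample_rate(0.04)
incident = adaptive_sample_rate(0.5)
```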

For businesses with evolving architectures, these controls also support advanced routing and filtering. You can set different rate limits for specific services, environments, or user groups, refining your monitoring strategy as your systems grow. Advanced users can even implement custom sampling logic tailored to unique business needs, ensuring monitoring efforts remain aligned with organizational goals.
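For traces, per-service rates are commonly set through tracer environment variables such as `DD_TRACE_SAMPLE_RATE`, `DD_TRACE_RATE_LIMIT`, and `DD_TRACE_SAMPLING_RULES`. The helper below assembles those values; the helper itself, the service names, and the rates are assumptions, and exact support varies by tracer language and version, so verify against the documentation for your library.

```python
import json
from typing import Dict


def sampling_env(default_rate: float,
                 per_service: Dict[str, float],
                 rate_limit_per_sec: int) -> Dict[str, str]:
    """Build tracer environment variables for default and per-service sampling."""
    for name, rate in {"default": default_rate, **per_service}.items():
        if not 0.0 <= rate <= 1.0:
            raise ValueError(f"sample rate for {name} must be between 0 and 1")
    rules = [{"service": service, "sample_rate": rate}
             for service, rate in sorted(per_service.items())]
    return {
        "DD_TRACE_SAMPLE_RATE": str(default_rate),
        "DD_TRACE_RATE_LIMIT": str(rate_limit_per_sec),
        "DD_TRACE_SAMPLING_RULES": json.dumps(rules),
    }


# Keep every checkout trace, but only 1% of traces from an internal tool.
env = sampling_env(0.1, {"checkout": 1.0, "internal-tools": 0.01}, rate_limit_per_sec=50)
```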

5. Real-Time Dashboards with Data Processing

Real-time dashboards are the perfect complement to features like anomaly detection and rate limiting, rounding out your monitoring toolkit. With Datadog's real-time dashboards, raw data is transformed into meaningful insights, all while reducing the strain on your systems. By centralizing data visualization and processing, these dashboards eliminate the need for multiple specialized tools and offload heavy calculations to Datadog's infrastructure. This seamless integration with other Datadog features boosts overall system performance.

How They Optimize Resources

By handling data processing on Datadog's infrastructure, these dashboards free up your servers' CPU and memory. Instead of running numerous local monitoring queries, you get pre-processed, aggregated insights that make system monitoring simpler and more efficient. Additionally, consolidating multiple data streams into a single, unified view reduces the hassle of managing separate monitoring tools, saving time and resources.
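To picture what "pre-processed, aggregated insights" means, here is a small rollup that averages raw points into fixed time buckets, the kind of work a dashboard backend does so your servers do not have to. The function name and the data are assumptions for illustration only.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple


def rollup_avg(points: List[Tuple[int, float]],
               bucket_seconds: int) -> List[Tuple[int, float]]:
    """Average (timestamp, value) points into buckets of `bucket_seconds`."""
    buckets: Dict[int, List[float]] = defaultdict(list)
    for timestamp, value in points:
        bucket_start = timestamp - timestamp % bucket_seconds
        buckets[bucket_start].append(value)
    return [(start, mean(values)) for start, values in sorted(buckets.items())]


# Four raw samples collapse into two one-minute averages.
raw = [(0, 10.0), (30, 20.0), (60, 30.0), (90, 50.0)]
per_minute = rollup_avg(raw, bucket_seconds=60)
```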

Budget-Friendly Benefits

Centralizing data visualization and processing on one platform can significantly cut costs, especially for small and medium-sized businesses. Datadog's clear pay-per-use pricing model ensures you only pay for what you use, while flexible data retention policies allow you to manage storage costs by setting retention periods based on the importance of your metrics.

Faster incident resolution is another cost-saving benefit. By speeding up the process of identifying and fixing issues, these dashboards help reduce downtime and the associated operational expenses.

Simple Setup for SMBs

Datadog provides pre-built dashboard templates designed for common small and medium-sized business scenarios - like e-commerce sites, SaaS platforms, and web services. These templates offer immediate value without requiring complex configurations. The user-friendly drag-and-drop interface makes it easy for both technical and non-technical team members to access critical insights.

With one-click integrations, you can connect your dashboards to tools like Slack, PagerDuty, and email systems, streamlining alerting and notification workflows. Whether you're in the office or on the move, business owners and managers can monitor performance from any device, ensuring you're always in the loop.

Built to Grow with Your Needs

Datadog's real-time dashboards are designed to scale effortlessly with increasing data volumes and user demands. Role-based access controls allow you to customize visibility for different teams, making sure each department sees the metrics that matter most to them.

Advanced API integrations enhance dashboard functionality, enabling custom workflows and automated responses to meet changing operational needs. Whether you're monitoring development, staging, or production environments, these dashboards provide a unified view across your software lifecycle, supporting modern approaches like blue-green deployments and microservices architectures.
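As an example of what an API-driven dashboard can look like, the sketch below builds a JSON payload in the general shape accepted by Datadog's dashboard API (a title, a layout type, and a list of widget definitions). The helper names, the dashboard title, and the queries are assumptions, and the code only builds the payload; sending it requires your API and application keys, so consult the API reference for the current schema before using it.

```python
import json
from typing import Dict, List


def timeseries_widget(title: str, query: str) -> Dict:
    """Describe one timeseries widget driven by a single metric query."""
    return {
        "definition": {
            "type": "timeseries",
            "title": title,
            "requests": [{"q": query}],
        }
    }


def build_dashboard(title: str, widgets: List[Dict]) -> Dict:
    """Assemble a dashboard payload with an ordered (grid-free) layout."""
    return {"title": title, "layout_type": "ordered", "widgets": widgets}


payload = build_dashboard(
    "SMB overview (example)",
    [
        timeseries_widget("Prod CPU by host", "avg:system.cpu.user{env:prod} by {host}"),
        timeseries_widget("Staging CPU by host", "avg:system.cpu.user{env:staging} by {host}"),
    ],
)
body = json.dumps(payload)  # request body you would POST to the dashboard endpoint
```

Keeping dashboards as code like this makes it easier to create the same view for each environment and review changes before they go live.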

For more tips on making the most of these dashboards, check out the expert insights on Scaling with Datadog for SMBs (https://datadog.criticalcloud.ai).

Feature Comparison Table

Choosing the right monitoring solution for your SMB means understanding how traditional methods stack up against Datadog's efficient and scalable features. Here's a breakdown of the key differences:

| Monitoring Approach | Resource Impact | Cost-Saving Potential | SMB Scalability |
|---|---|---|---|
| Traditional Server Monitoring | Relies on local agents, often leading to high CPU and memory usage | Comes with hefty expenses from enterprise tools and added infrastructure | Requires dedicated IT staff and complex setups |
| Datadog Anomaly Detection | Reduces false alerts and cuts down on manual monitoring efforts | Saves time by streamlining incident response | Excellent – uses machine learning for automated scaling |
| Standard Log Management | Captures all logs, which can lead to high storage demands | Risk of unnecessary storage costs | Becomes costly as data volumes grow |
| Datadog Flex Logs | Focuses on processing only relevant logs, reducing storage needs significantly | Lowers log management expenses | Outstanding – pay only for the logs you actually need |
| Basic Tagging Systems | Requires manual tagging, slowing down troubleshooting | Can delay incident resolution | Moderate – harder to manage as infrastructure grows |
| Datadog Custom Tagging | Automatically organizes resources for faster filtering and troubleshooting | Cuts down troubleshooting time | Excellent – scales seamlessly with infrastructure |
| Generic Rate Limiting | Applies a one-size-fits-all approach, risking missed events during high traffic | Needs constant manual adjustments | Limited – requires ongoing maintenance |
| Datadog Rate Limiting & Sampling | Prioritizes critical data intelligently while maintaining visibility | Optimizes data ingestion costs | Superior – adapts to changing traffic patterns |
| Static Dashboard Tools | Heavy on local processing, with limited real-time capabilities | Often requires multiple tools, increasing costs | Poor – struggles to handle growing data volumes |
| Datadog Real-Time Dashboards | Offloads processing to the cloud, reducing local resource strain | Combines multiple tools into one efficient platform | Exceptional – built for large-scale data handling |

This table underscores how Datadog's modern features not only reduce resource strain and costs but also scale effortlessly to meet the needs of growing SMBs.

Conclusion

Managing resources efficiently is a cornerstone of SMB growth, and Datadog offers tools designed to make this easier. Features like anomaly detection automatically flag unusual patterns, while Flex Logs ensures you’re only paying for the log data you actually need. With custom tagging, your infrastructure becomes more organized and easier to search, and rate limiting with sampling controls helps keep data ingestion costs predictable without compromising visibility. Together, these capabilities create a monitoring system that’s both streamlined and scalable.

At the heart of it all are real-time dashboards, which provide instant insights into your infrastructure. These dashboards let you monitor your systems without overwhelming local resources, helping you reduce costs and focus on what matters most - your core business operations.

What’s more, these tools are automated, meaning even a small team can handle larger, more complex environments without significantly increasing operational demands. This unified approach highlights Datadog’s role in delivering monitoring solutions that are both cost-efficient and responsive, tailored to the needs of SMBs.

If you’re ready to optimize your monitoring strategy, check out the Scaling with Datadog for SMBs blog. It’s packed with expert advice and practical tips for small and medium-sized businesses aiming to get the most out of Datadog. You’ll find insights on improving cloud infrastructure performance, boosting system efficiency, and driving growth through smarter monitoring.

Start by focusing on one or two features that address your biggest resource challenges. As you see results, you can gradually expand your use of Datadog’s tools. The important thing is to take that first step toward a more scalable and cost-effective monitoring solution.

FAQs

How does Datadog's anomaly detection help optimize resource usage and prevent over-scaling?

Datadog's anomaly detection takes a close look at historical metrics to spot patterns and flag unusual activity as it happens. By identifying these deviations from the expected trends, teams can tackle potential issues early - preventing over-scaling or wasting resources.

This capability also plays a key role in predictive autoscaling, where resource allocation is adjusted dynamically based on real-time demand instead of relying on static thresholds. The result? Systems that stay efficient, avoid over-provisioning, and continue delivering reliable performance.

How does using Flex Logs help reduce costs compared to traditional log management?

Flex Logs helps businesses cut costs by splitting storage fees from query expenses, making it more affordable to store and analyze large amounts of log data. It provides a budget-friendly warm storage option tailored for high-volume datasets that are rarely queried. This ensures essential logs remain accessible without breaking the bank, allowing companies to optimize resources while staying efficient.

How does custom tagging in Datadog help SMBs improve resource monitoring and manage costs more effectively?

Custom tagging in Datadog allows small and medium-sized businesses (SMBs) to simplify resource monitoring and manage costs more effectively. By enabling detailed filtering and grouping of telemetry data, tagging makes it easier to spot inefficiencies, analyze usage trends, and identify areas where resources might be wasted.

With tags, SMBs can assign costs to specific departments or projects, establish budget limits, and maintain consistent tracking across various cloud environments. This approach provides clearer insights, optimizes resource usage, and helps keep costs under control.